Getting Started with Offline Doctor

Welcome to Offline Doctor! This guide will help you set up and start using our AI-powered medical assistant. Once installed, Offline Doctor provides private, secure medical guidance without requiring an internet connection.

🔍 System Requirements

Hardware Requirements

- CPU: 2 cores minimum, 4+ cores recommended

- RAM: 4GB minimum, 8GB+ recommended

- Storage: 2GB free space minimum

- Graphics: Basic GPU (integrated graphics sufficient)
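To check a machine against these minimums before installing, here is a minimal Python sketch. It is not part of Offline Doctor. It reads the core count with os.cpu_count, free disk space with shutil.disk_usage, and total RAM with os.sysconf, which is available on Linux and most other Unix systems. Where os.sysconf is missing (for example on Windows), RAM is reported as unknown. The GPU line is not checked.

```python
import os
import shutil

# Minimums from the Hardware Requirements list above.
MIN_CORES = 2
MIN_RAM_GB = 4
MIN_FREE_DISK_GB = 2

# Decimal gigabytes: slightly lenient, since the OS reserves part of installed RAM.
GB = 10**9


def total_ram_bytes():
    """Return total physical RAM in bytes, or None if the platform cannot report it."""
    try:
        return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    except (AttributeError, ValueError, OSError):
        # AttributeError: os.sysconf does not exist (e.g. Windows).
        # ValueError: the name is not supported on this system.
        return None


def check_hardware(path="."):
    """Return (requirement, ok) pairs; ok is None when the value is unknown."""
    cores = os.cpu_count()
    ram = total_ram_bytes()
    free = shutil.disk_usage(path).free
    return [
        (f"CPU cores >= {MIN_CORES}", None if cores is None else cores >= MIN_CORES),
        (f"RAM >= {MIN_RAM_GB} GB", None if ram is None else ram >= MIN_RAM_GB * GB),
        (f"Free disk >= {MIN_FREE_DISK_GB} GB", free >= MIN_FREE_DISK_GB * GB),
    ]


if __name__ == "__main__":
    for name, ok in check_hardware():
        status = "unknown" if ok is None else ("OK" if ok else "below minimum")
        print(f"{name}: {status}")
```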

Software Prerequisites

- Operating System

  - Windows 10/11 (64-bit)

  - macOS 10.15 or later

  - Linux (Ubuntu 20.04+, Fedora 34+, or similar)

- Required Software
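The installation commands in this guide call git, node/npm, python3 and ollama. A minimal sketch, assuming those are the tools you need (the list is inferred from the commands, not an official prerequisite list), that reports which of them cannot be found on PATH:

```python
import shutil

# Assumed tool list, inferred from the commands used in this guide.
# On Windows, Python may be installed as "python" or "py" rather than "python3".
REQUIRED_TOOLS = ["git", "node", "npm", "python3", "ollama"]


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the subset of tools that shutil.which cannot locate on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Missing from PATH:", ", ".join(missing))
    else:
        print("All required tools found.")
```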

🚀 Installation

Quick Setup (Recommended)

- Clone the Repository

    git clone https://github.com/lpolish/offlinedoctor.git
    cd offlinedoctor

- Run Automated Setup

  Choose your platform:

  Linux/macOS:

    ./setup.sh

  Windows:

    setup.bat

  This script will:

  - Install all dependencies

  - Set up the Python environment

  - Configure Ollama

  - Install required AI models

  - Create desktop shortcuts

- Start Offline Doctor

    npm start

Manual Installation

If you prefer more control over the installation process:

- Install Dependencies

    git clone https://github.com/lpolish/offlinedoctor.git
    cd offlinedoctor
    npm install

- Configure Python Backend

    cd backend
    python3 -m venv venv
    # Activate virtual environment
    # On Linux/macOS:
    source venv/bin/activate
    # On Windows:
    # venv\Scripts\activate
    pip install -r requirements.txt
    deactivate
    cd ..

- Install Ollama

  Linux/macOS:

    curl -fsSL https://ollama.ai/install.sh | sh

  Windows:

  - Download from Ollama’s website

  - Run the installer

  - Follow the setup wizard

- Download AI Models

    ollama pull llama2
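After pulling, you can confirm that llama2 is installed by asking the local Ollama server for its model list (ollama list on the command line reports the same information). This sketch uses only the Python standard library and Ollama's GET /api/tags endpoint on its default address, http://localhost:11434; the helper names are illustrative.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default listen address


def parse_model_names(body):
    """Extract model names such as 'llama2:latest' from an /api/tags JSON body."""
    data = json.loads(body)
    return [model["name"] for model in data.get("models", [])]


def fetch_model_names(base_url=OLLAMA_URL, timeout=5.0):
    """Ask the running Ollama server which models are installed locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return parse_model_names(resp.read())


def has_model(names, wanted="llama2"):
    """True if `wanted` is installed under any tag, e.g. 'llama2:latest' or 'llama2:13b'."""
    return any(name == wanted or name.startswith(wanted + ":") for name in names)
```

With Ollama running, has_model(fetch_model_names()) should return True; if it returns False, run ollama pull llama2 again.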

🎯 Initial Configuration

First Launch

- Start the Application

  - Run npm start, or use the desktop shortcut

  - Wait for initialization (1-2 minutes)

  - Confirm all services are running

- Essential Settings

  - Open the Settings tab

  - Select your preferred AI model

  - Configure privacy options

  - Set up data storage preferences
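For the "Confirm all services are running" step, one quick way to see whether Ollama is up is to attempt a TCP connection to its default port, 11434. A minimal sketch follows; the Python backend's port is not stated in this guide, so it is left out rather than guessed.

```python
import socket

# Ollama listens on 127.0.0.1:11434 by default. The backend's port is not
# documented here; add an entry for it once you know it.
SERVICES = {
    "Ollama": ("127.0.0.1", 11434),
}


def is_listening(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections and timeouts (socket.timeout subclasses OSError).
        return False


if __name__ == "__main__":
    for name, (host, port) in SERVICES.items():
        state = "running" if is_listening(host, port) else "NOT reachable"
        print(f"{name} ({host}:{port}): {state}")
```

A successful connection only shows that something is listening on the port, not that the service is healthy.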

Privacy Settings

- Data Storage

  - Choose storage location

  - Set retention period

  - Configure backup options

- Anonymization

  - Enable/disable history

  - Set data anonymization level

  - Configure export options
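To illustrate what a retention period does, here is a hypothetical sketch: records older than the chosen number of days are dropped. The record layout (a dict with a timezone-aware created_at datetime) and the function name are assumptions for illustration, not Offline Doctor's actual storage format.

```python
from datetime import datetime, timedelta, timezone


def prune_history(records, retention_days, now=None):
    """Keep only records created within the last `retention_days` days.

    Hypothetical record shape: {"created_at": <timezone-aware datetime>, ...}.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    return [record for record in records if record["created_at"] >= cutoff]
```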

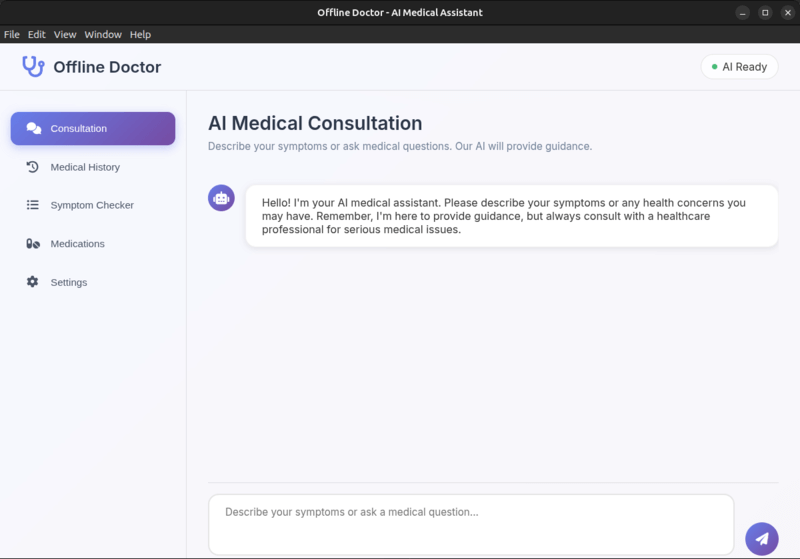

💡 Basic Usage

Medical Consultation

- Start a Consultation

  - Click the “Consultation” tab

  - Type your medical question

  - Provide relevant details

  - Review the AI response

- Best Practices

  - Be specific about symptoms

  - Include duration and severity

  - Mention relevant history

  - Ask follow-up questions
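The best practices above amount to a simple template. This hypothetical helper is not part of the app (the Consultation tab takes free text); it just assembles a question that states the symptom, its duration and severity, and any relevant history.

```python
def build_question(symptom, duration, severity, history=None, question=None):
    """Assemble a consultation message following the best practices above.

    Hypothetical helper: the field labels are illustrative, not an app format.
    """
    parts = [f"Symptom: {symptom}", f"Duration: {duration}", f"Severity: {severity}"]
    if history:
        parts.append(f"Relevant history: {history}")
    if question:
        parts.append(f"Question: {question}")
    return "\n".join(parts)
```

For example, build_question("headache", "3 days", "moderate, 5/10", history="migraines as a teenager", question="Should I be concerned?") produces a five-line message you can paste into the Consultation tab.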

Symptom Checker

- Check Symptoms

  - Open Symptom Checker

  - Select all relevant symptoms

  - Add duration and severity

  - Get AI assessment

- Track History

  - Save important consultations

  - Monitor symptom progression

  - Export records if needed
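As an illustration of exporting records, the sketch below writes saved entries to CSV with Python's csv module, sorted by date so symptom progression reads top to bottom. The record fields are hypothetical; Offline Doctor's own export format may differ.

```python
import csv
import io


def export_history_csv(records, fields=("date", "symptom", "severity", "notes")):
    """Serialize saved records to CSV text.

    Hypothetical record shape: dicts with ISO-format "date" strings plus the
    other fields; missing fields are written as empty cells.
    """
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(fields), extrasaction="ignore")
    writer.writeheader()
    # ISO dates sort chronologically as strings, so progression reads in order.
    for row in sorted(records, key=lambda r: r["date"]):
        writer.writerow(row)
    return buf.getvalue()
```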

🔧 Troubleshooting

Common Issues

- Application Won’t Start

  - Check Node.js installation

  - Verify Python environment

  - Confirm Ollama is running

  - Check system requirements

- AI Model Issues

    # Verify the Ollama service is running (Linux/macOS)
    ps aux | grep ollama
    # Start Ollama if it is not already running
    ollama serve
    # Reinstall the model if needed
    ollama pull llama2

- Backend Problems

    # Reset the Python environment
    cd backend
    rm -rf venv  # On Windows: rmdir /s /q venv
    python3 -m venv venv
    source venv/bin/activate  # On Windows: venv\Scripts\activate
    pip install -r requirements.txt
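The same reset can be scripted in Python with the standard venv module, which avoids the platform-specific delete and activate commands. A minimal sketch, assuming it is run from the repository root and that the backend lives in backend/ with a requirements.txt, as in the commands above; the function names are illustrative.

```python
import os
import subprocess
import venv
from pathlib import Path


def venv_python(env_dir):
    """Return the interpreter path in a venv: Scripts\\python.exe on Windows, bin/python elsewhere."""
    env_dir = Path(env_dir)
    if os.name == "nt":
        return env_dir / "Scripts" / "python.exe"
    return env_dir / "bin" / "python"


def reset_backend_env(backend_dir="backend", install=True):
    """Recreate <backend_dir>/venv from scratch and optionally reinstall requirements.txt."""
    # Absolute path, because subprocess resolves a relative executable against `cwd`.
    env_dir = (Path(backend_dir) / "venv").resolve()
    # clear=True wipes the old environment first, standing in for `rm -rf venv`.
    # pip is only bootstrapped when it is going to be used.
    venv.create(env_dir, clear=True, with_pip=install)
    if install:
        # Calling the venv's own interpreter makes activation unnecessary.
        subprocess.run(
            [str(venv_python(env_dir)), "-m", "pip", "install", "-r", "requirements.txt"],
            cwd=backend_dir,
            check=True,
        )
    return env_dir
```

Calling reset_backend_env() from the repository root rebuilds the environment and reinstalls the backend's dependencies in one step.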

Getting Help

- Check Documentation

- Visit FAQ

- Join Discord

- Report Issues

📚 Next Steps

- Explore Features

  - Read our Documentation

  - Try the Tutorials

  - Check Example Uses

- Join Community

  - Join Discussions

  - Follow Development

  - Share Feedback

- Advanced Usage

  - Custom AI Models

  - API Integration

  - Development Guide

Offline Doctor
